Aims of Project

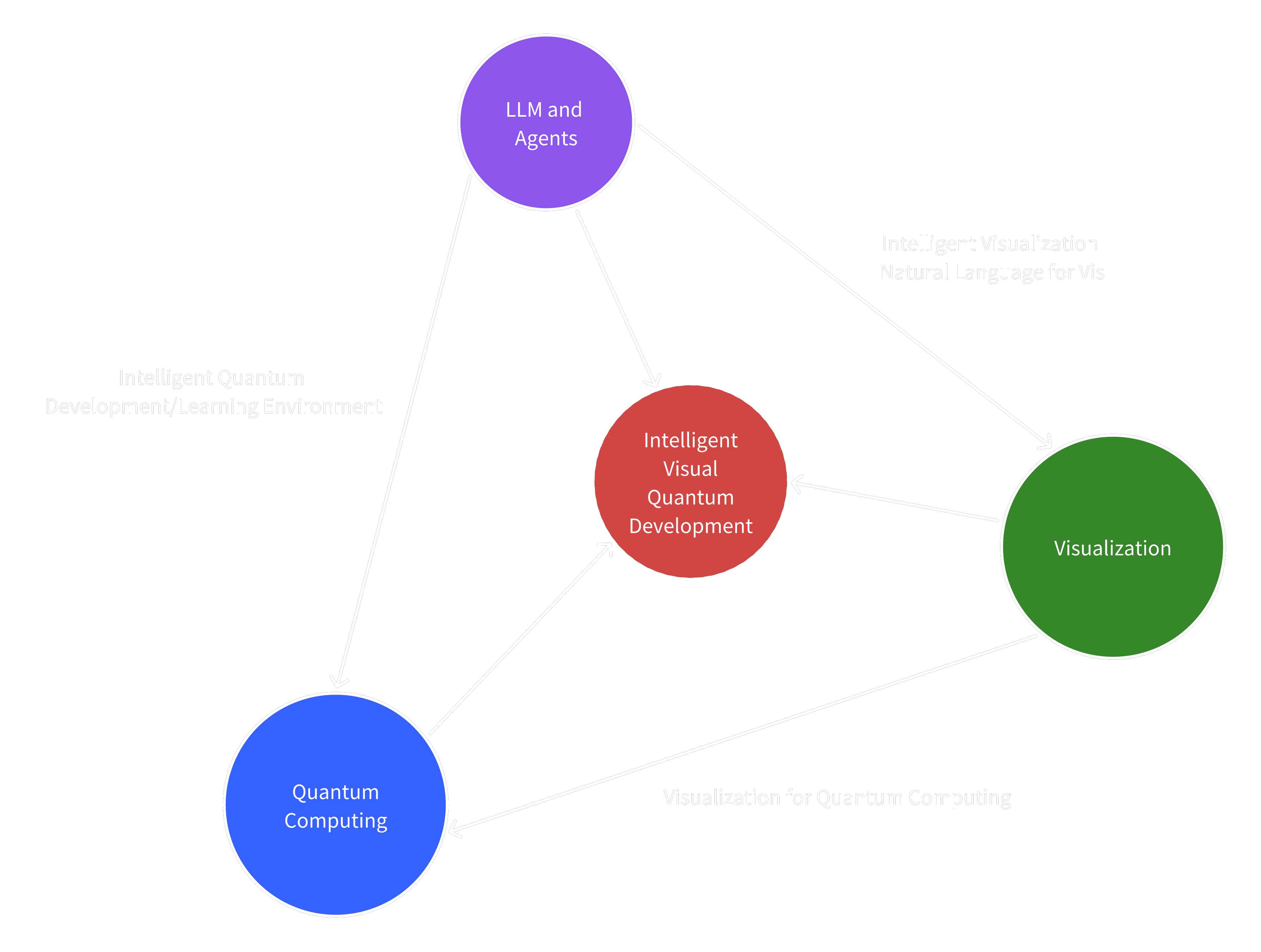

Figure 1: The three pillars of the project are Quantum Computing, LLMs and Agents, and Visualization. Valuable challenges lie at the intersections of these pillars.

Background

The intersection of quantum computing, large language models (LLMs), and visualization presents unprecedented opportunities and challenges in computer science.

Quantum computing, while promising revolutionary computational capabilities, remains largely inaccessible due to its inherent complexity and the steep learning curve associated with quantum software development.

Meanwhile, recent advances in LLMs have shown remarkable potential in natural language understanding, code generation, and even reasoning. Equipped with auxiliary long-term memory stores and external tools, LLM agents for programming (e.g., Devin and ChatDev) have made substantial progress on benchmarks of (classical) software development such as SWE-bench, opening the door to revolutionizing development workflows.

Yet the application of LLMs and agents to quantum computing remains largely unexplored. Simply adapting existing programming agents is not sufficient: quantum programs rely on different operators (i.e., quantum gates and equivalent unitary operations), algorithmic building blocks (e.g., the quantum Fourier transform and Grover's search), domain-specific languages and SDKs (e.g., QASM, Qiskit) and infrastructure (e.g., quantum simulators and quantum computers), on which current LLM agents have not been extensively trained or evaluated.
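To make the contrast with classical code concrete, the following is a minimal, dependency-free sketch (an illustration only, not part of the proposed system) in which quantum operations are unitary matrices acting on a 2^n-dimensional state vector. It builds a Bell state from a Hadamard and a CNOT gate, and constructs the quantum Fourier transform matrix F[j][k] = ω^(jk)/√N with ω = e^(2πi/N). The helper names `kron`, `apply`, `is_unitary` and `qft_matrix` are hypothetical, and the big-endian qubit ordering is a convention chosen here (Qiskit, for instance, uses little-endian ordering).

```python
import cmath
import math

Matrix = list[list[complex]]
State = list[complex]

S = 1 / math.sqrt(2)
H: Matrix = [[S, S], [S, -S]]   # Hadamard gate
I2: Matrix = [[1, 0], [0, 1]]   # single-qubit identity
# CNOT with the first (most significant) qubit as control:
# |00>->|00>, |01>->|01>, |10>->|11>, |11>->|10>
CNOT: Matrix = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]


def kron(a: Matrix, b: Matrix) -> Matrix:
    """Kronecker (tensor) product of two square matrices."""
    m = len(b)
    size = len(a) * m
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(size)]
            for i in range(size)]


def apply(u: Matrix, state: State) -> State:
    """Matrix-vector product U|psi>."""
    return [sum(row[k] * state[k] for k in range(len(state))) for row in u]


def is_unitary(u: Matrix, tol: float = 1e-9) -> bool:
    """Check U^dagger U = I entry by entry."""
    dim = len(u)
    for i in range(dim):
        for j in range(dim):
            entry = sum(u[k][i].conjugate() * u[k][j] for k in range(dim))
            if abs(entry - (1 if i == j else 0)) > tol:
                return False
    return True


def qft_matrix(n_qubits: int) -> Matrix:
    """QFT on n qubits: F[j][k] = omega**(j*k) / sqrt(N), with N = 2**n."""
    dim = 2 ** n_qubits
    omega = cmath.exp(2j * math.pi / dim)
    return [[omega ** (j * k) / math.sqrt(dim) for k in range(dim)]
            for j in range(dim)]


if __name__ == "__main__":
    zero = [1, 0, 0, 0]                            # |00>
    bell = apply(CNOT, apply(kron(H, I2), zero))   # (|00> + |11>) / sqrt(2)
    print("Bell state amplitudes:", [round(abs(a), 3) for a in bell])
    print("QFT on 2 qubits is unitary:", is_unitary(qft_matrix(2)))
```

Even this toy example touches concepts (tensor products, unitarity, qubit-ordering conventions) that have no direct counterpart in classical programming, which is part of why general-purpose coding agents cannot be assumed to handle them.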

Visualization, particularly in quantum computing, faces unique challenges in representing multi-qubit systems and quantum algorithms intuitively. Current NL2VIS (natural-language-to-visualization) systems lack comprehensive evaluation frameworks, and they offer only one-shot solutions that are neither context-aware nor personalized. However, people gain insights not only from a single visualization but also from the follow-up conversation and the iterative exploration, conversational and/or visual, that comes after it. There is a pressing need for visualization approaches that are more conversational, iterative and personalized.
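The scalability problem behind multi-qubit visualization can be stated in one line. A pure state of n qubits is a superposition over all 2^n computational basis states, so the number of complex amplitudes to depict grows exponentially with n:

```latex
|\psi\rangle = \sum_{x \in \{0,1\}^n} \alpha_x \, |x\rangle,
\qquad \alpha_x \in \mathbb{C},
\qquad \sum_{x} |\alpha_x|^2 = 1
```

After removing normalization and the unobservable global phase, this leaves 2^(n+1) - 2 real parameters: 2 for a single qubit (the two angles of the Bloch sphere), 6 for two qubits, and already 2,097,150 for n = 20. Single-qubit pictures such as the Bloch sphere therefore do not extend directly to larger systems, and because entangled states cannot be factored into per-qubit states, they generally cannot be shown as a set of independent per-qubit views.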

My background in LLM agent development, scientific visualization and GPU programming, combined with Professor Yong Wang's expertise in data visualization and visualization for quantum computing, and NTU's strength in quantum computing research, positions this project to address these challenges through an innovative integration of these three domains.

Aims and Significance

This research proposes a comprehensive framework that leverages the synergies between quantum computing, LLMs, and visualization to advance quantum software development and education. The project addresses three key intersections, ultimately converging on an integrated solution:

- Quantum Computing + LLM Agents: Intelligent Quantum Development Environment

    - Develop specialized quantum programming assistants that combine LLM-powered code generation with context-aware visualizations

    - Create interactive debugging tools that provide natural language explanations of quantum states and operations

    - Implement intelligent learning experiences and pathways for quantum computing concepts

    - Develop benchmarks for evaluating LLMs' quantum understanding capabilities

- Visualization + LLM Agents: Natural Language Visualization Interface

    - Develop intelligent visualization systems that respond to natural language queries, along with composable visualization components that agents can use

    - Create context-aware visualization tools that adapt to user expertise levels and even spark insights in conversations

    - Implement explanation-driven visualization generation for quantum concepts

    - Develop benchmarks for comprehensive evaluation of the visualization capabilities of multi-modal LLM agents

- Visualization + Quantum Computing: Advanced Quantum Visualization

    - Develop scalable visualization techniques for complex multi-qubit systems

    - Create interactive visualization tools that help users understand quantum computing concepts and quantum programs

    - Explore VR/MR approaches for understanding quantum systems
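For the benchmark aims above, one design question is how to score generated quantum programs, since two correct circuits can differ in gate choice or by an unobservable global phase and therefore cannot be compared as text. A minimal sketch, assuming each candidate and reference program has already been simulated to a pure state vector (for example with a statevector simulator), is to grade by state fidelity F = |⟨ψ_ref|ψ_cand⟩|². The function names `fidelity` and `grade` and the 0.99 threshold are hypothetical choices for illustration, not a specified benchmark protocol.

```python
import math

State = list[complex]


def fidelity(reference: State, candidate: State) -> float:
    """Fidelity |<reference|candidate>|^2 between two normalized pure states."""
    if len(reference) != len(candidate):
        raise ValueError("state vectors must have the same dimension")
    overlap = sum(r.conjugate() * c for r, c in zip(reference, candidate))
    return abs(overlap) ** 2


def grade(reference: State, candidate: State, threshold: float = 0.99) -> bool:
    """Hypothetical pass/fail rule: accept if fidelity reaches the threshold."""
    return fidelity(reference, candidate) >= threshold


if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    bell = [s, 0, 0, s]                     # reference: (|00> + |11>) / sqrt(2)
    same_up_to_phase = [-a for a in bell]   # global phase of -1: physically identical
    wrong_sign = [s, 0, 0, -s]              # (|00> - |11>) / sqrt(2): orthogonal state
    for name, cand in [("global phase", same_up_to_phase), ("wrong sign", wrong_sign)]:
        print(name, round(fidelity(bell, cand), 6), grade(bell, cand))
```

Fidelity-based scoring only covers state-preparation tasks; tasks whose correctness depends on the full unitary or on measurement statistics would need a different comparison.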

The convergence of these three directions leads to an Intelligent Visual Quantum Development framework that will advance how we approach quantum software development and education. This integrated solution combines:

- LLM-powered quantum code agents and learning assistants

- Natural language visualization interfaces powered by LLM agents

- Interactive quantum system exploration

- Comprehensive evaluation frameworks for both learning outcomes and system effectiveness

The project's impact spans three key dimensions, extending beyond academic contributions:

- Technical Innovation: Novel integration of cutting-edge technologies and new approaches to quantum software development

- Research Impact: Significant contributions to three distinct fields and creation of new evaluation frameworks

- Practical Applications: Enhanced quantum software development tools, improved quantum education and training, and better understanding of quantum systems

By combining my experience in LLM agent development with NTU's expertise in visualization and quantum computing, this research aims to create new paradigms for human-AI collaboration in quantum software development.